Bayes Filter and Pre-Lab

We were provided with the Bayes Filter implementation file, a lab11_sim.ipynb notebook that shows the Bayes Filter running in simulation, and a lab11_real.ipynb notebook for communication between the real robot and the simulation.

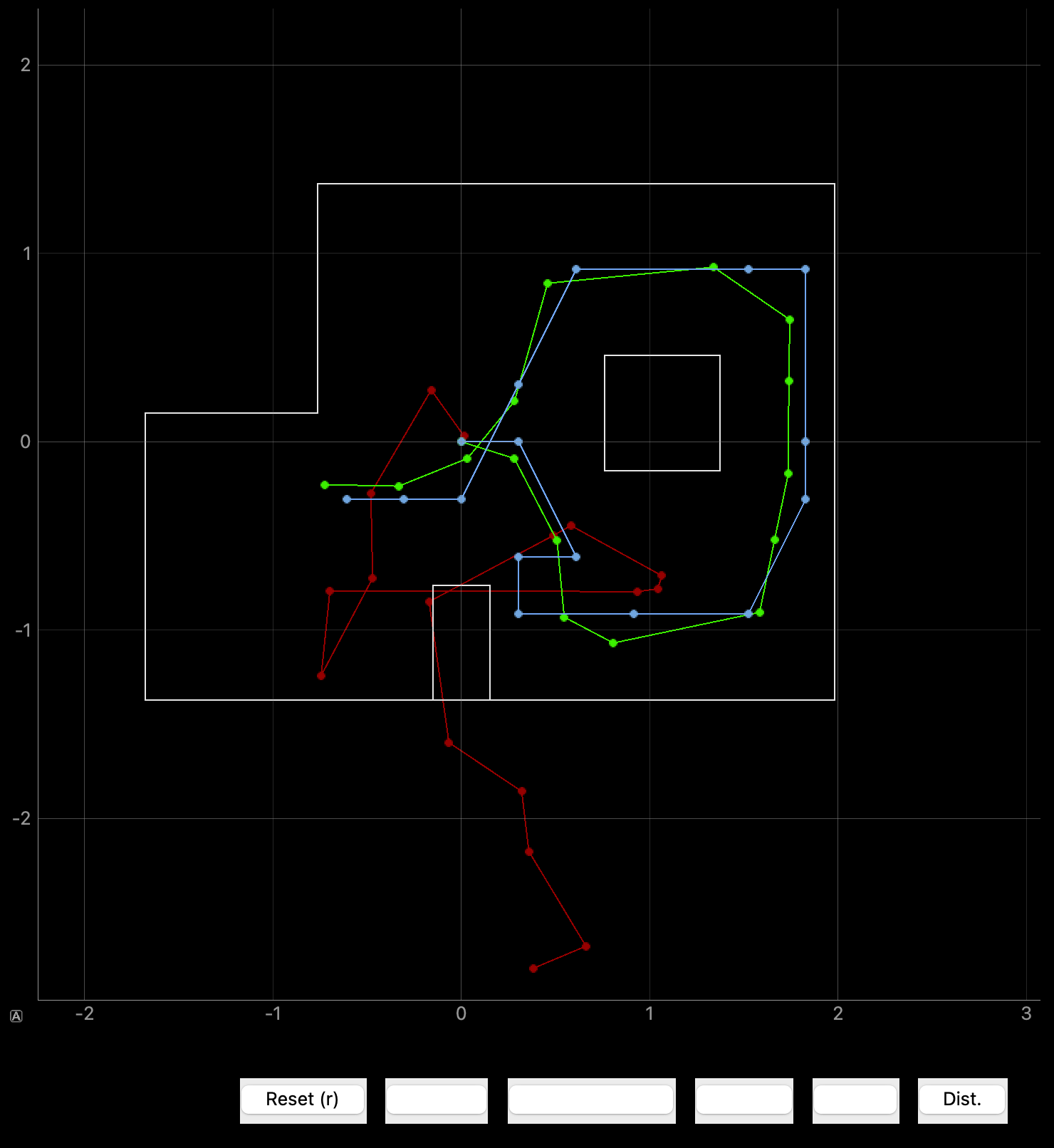

Simulation

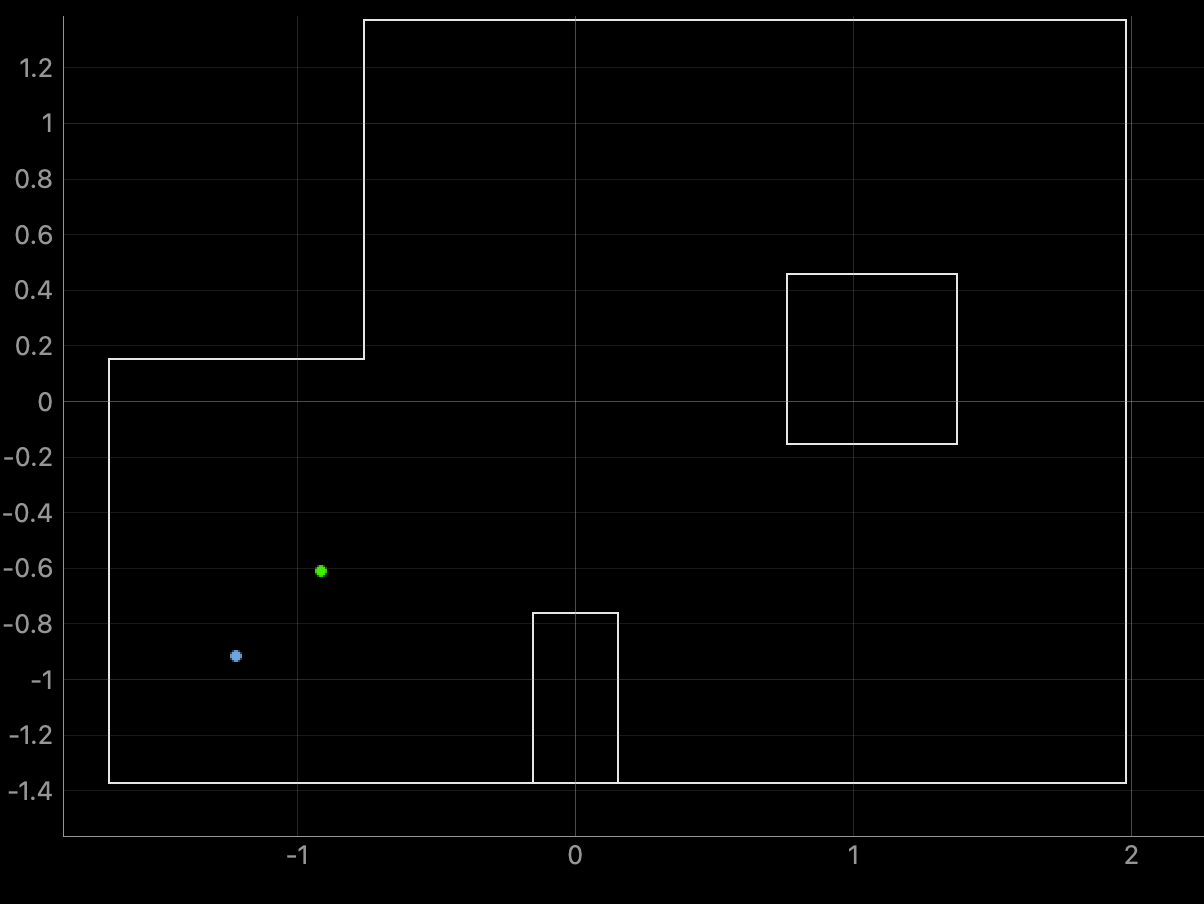

First, I used the virtual robot to test the Bayes Filter implementation provided in the lab. The result, which plots odometry, ground truth, and belief, is very similar to what I got in lab 10: the belief stays close to the ground truth.

Implementation of the Bayes Filter on the real robot

Coding implementation

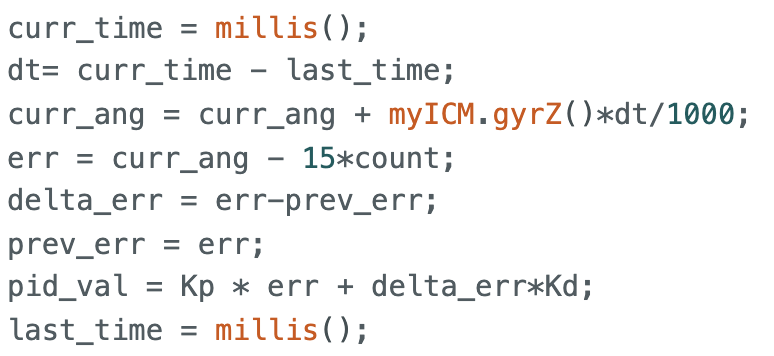

First, I implemented PD control on the robot's angle so that, during the observation loop, it stops and measures distance every 20 degrees of rotation. This gives 18 data points over a full 360-degree turn. Below are the code snippet for the PD angle controller and a video of the robot rotating in 20-degree increments.
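A minimal sketch of the stop-and-measure idea, written in Python for consistency with the lab notebooks even though the real controller runs on the Artemis in Arduino code. The function names, the gains `kp` and `kd`, and the 255 PWM clamp are hypothetical assumptions, not the values used on my robot.

```python
# Hypothetical sketch: PD control of yaw toward a sequence of 20-degree setpoints.
# Gains, names, and the PWM clamp are illustrative assumptions.

STEP_DEG = 20.0
NUM_STEPS = 18  # 18 steps * 20 degrees = 360 degrees


def observation_setpoints(start_deg=0.0, step_deg=STEP_DEG, num_steps=NUM_STEPS):
    """Return the yaw setpoints (degrees) at which the robot stops for a ToF reading."""
    return [start_deg + i * step_deg for i in range(num_steps)]


def pd_angle_output(setpoint_deg, yaw_deg, prev_error, dt, kp=3.0, kd=0.5, max_pwm=255.0):
    """Run one PD step and return (motor command, current error).

    The command is clamped to [-max_pwm, max_pwm]; its sign selects the turn direction.
    """
    error = setpoint_deg - yaw_deg
    # Finite-difference derivative of the error; skipped if dt is not positive.
    derivative = (error - prev_error) / dt if dt > 0 else 0.0
    u = kp * error + kd * derivative
    u = max(-max_pwm, min(max_pwm, u))
    return u, error
```

Because 18 x 20 degrees = 360 degrees, the setpoint list stops at 340 degrees; a reading at 360 degrees would duplicate the one at 0 degrees.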

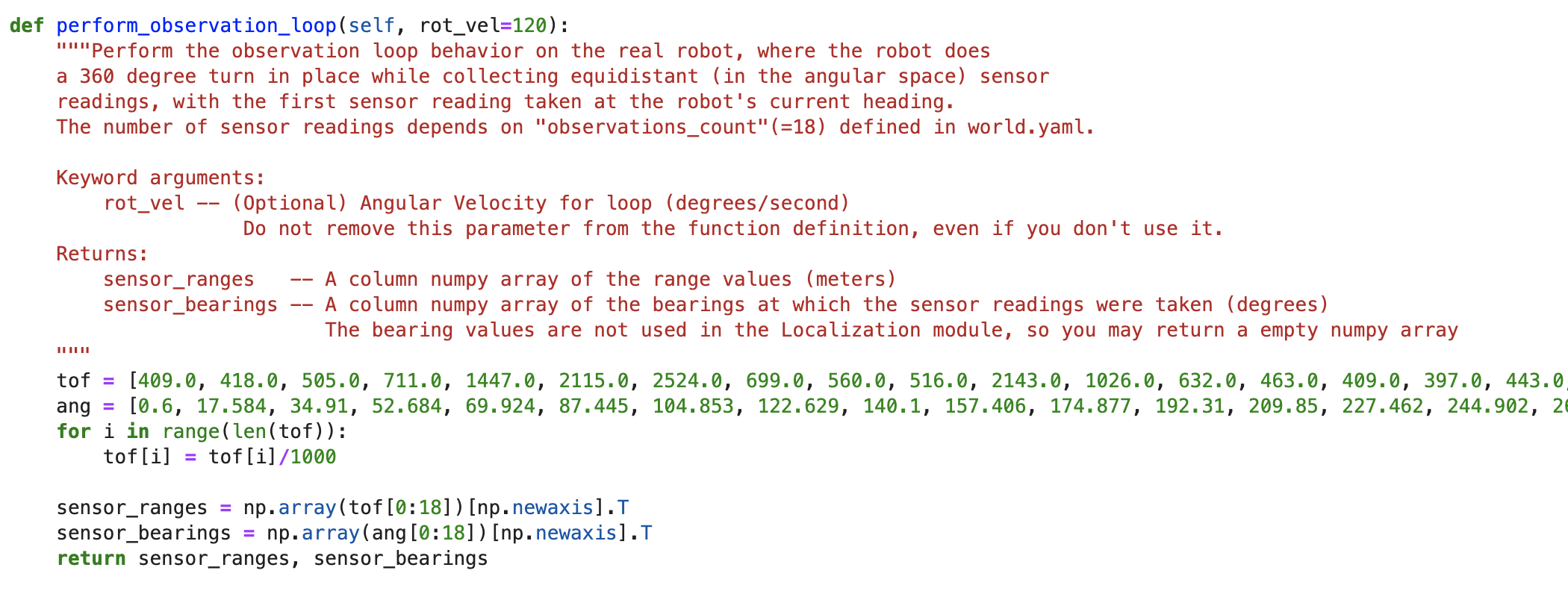

Next, I collected the data from the observation loop and used the provided Bayes Filter to compute the belief. One issue I had was that when I tried to integrate my robot communication code into the perform_observation_loop function, it kept giving me an error. So, on Anya's advice, I collected the data separately and passed the array of distance values in directly, as shown below.
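I treated the provided Bayes Filter as given, but the core of its update step can be illustrated with a generic grid-based sketch. It assumes each grid cell has a list of precomputed expected ranges (one per bearing) and that the readings have independent Gaussian noise with a hypothetical standard deviation `sigma`; the function name and parameters are illustrative, not the provided implementation.

```python
import math


def update_step(prior, expected_ranges, measured_ranges, sigma=0.1):
    """Generic grid Bayes update (sketch).

    prior: list of probabilities, one per grid cell.
    expected_ranges: per cell, the ranges (m) the sensor should see from that cell.
    measured_ranges: the ranges (m) actually measured, in the same bearing order.
    """
    # Log-likelihood of all readings for each cell under an independent Gaussian
    # sensor model; the normalizing constant is the same for every cell, so it is dropped.
    log_liks = []
    for expected in expected_ranges:
        ll = sum(-0.5 * ((z - z_hat) / sigma) ** 2
                 for z, z_hat in zip(measured_ranges, expected))
        log_liks.append(ll)
    # Subtract the maximum before exponentiating to avoid underflow.
    max_ll = max(log_liks)
    unnormalized = [p * math.exp(ll - max_ll) for p, ll in zip(prior, log_liks)]
    total = sum(unnormalized)
    return [w / total for w in unnormalized]
```

Working in log space keeps the product of 18 small Gaussian densities from underflowing to zero.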

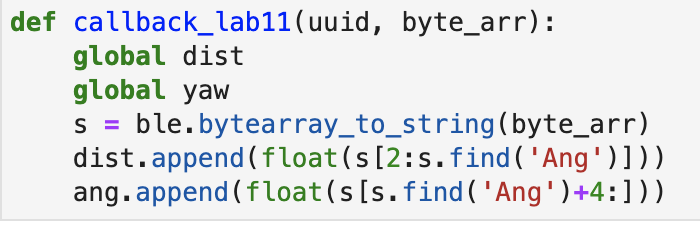

This is the callback function used to collect the distance data after eighteen 20-degree turns. The measurements are first stored in an array on the Artemis and sent to the computer once the 360-degree turn is complete.
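A sketch of a Python-side notification handler for the batched readings. The message format (all readings in one string, separated by `|`, in millimeters), the handler's `(uuid, byte_array)` signature, and the names `distances_mm` and `observation_complete` are assumptions for illustration.

```python
# Hypothetical: the Artemis sends the readings as one string such as "512|498|...",
# in millimeters. The "|" separator and the handler signature are assumptions.

EXPECTED_READINGS = 18
distances_mm = []  # filled by the handler as notifications arrive


def notification_handler(uuid, byte_array):
    """Decode one string notification and store the distances it carries."""
    message = byte_array.decode("utf-8").strip()
    values = [float(tok) for tok in message.split("|") if tok]
    distances_mm.extend(values)


def observation_complete():
    """True once all readings from the 360-degree turn have arrived."""
    return len(distances_mm) >= EXPECTED_READINGS
```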

Using the data retrieved by the callback function, the array of 18 measurements is converted to meters and returned from perform_observation_loop, as shown above.
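The unit conversion step can be sketched as a small helper. It assumes the ToF readings arrive in millimeters and that reading `i` was taken at bearing `i * 20` degrees; the name `to_observation` is hypothetical, and the provided notebook may expect a different container (for example NumPy column arrays) rather than plain lists.

```python
STEP_DEG = 20.0
NUM_READINGS = 18


def to_observation(distances_mm, step_deg=STEP_DEG):
    """Convert 18 ToF readings (assumed mm) to meters and pair them with bearings."""
    if len(distances_mm) != NUM_READINGS:
        raise ValueError(f"expected {NUM_READINGS} readings, got {len(distances_mm)}")
    ranges_m = [d / 1000.0 for d in distances_mm]  # millimeters -> meters
    bearings_deg = [i * step_deg for i in range(len(distances_mm))]
    return ranges_m, bearings_deg
```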

Results

Running localization at (-3ft, -2ft, 0 deg)

This was the least successful run of the four points, since it had both translational (x and y) and angular error. I attribute this to inaccurate ToF sensor readings, which we also saw in lab 9: the longer the measured distance, the less accurate the reading.
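To compare runs consistently, the belief and ground-truth poses can be differenced with the heading wrapped, so that, for example, 350 degrees versus 10 degrees counts as a 20-degree error rather than 340. This hypothetical helper works in the same units as the headings (feet and degrees); the example input in the test is illustrative, not a measured result.

```python
import math


def pose_error(belief, ground_truth):
    """Return (dx, dy, translational distance, dtheta) for belief minus ground truth.

    Poses are (x, y, theta) tuples; theta is in degrees and the heading error
    is wrapped into [-180, 180).
    """
    bx, by, bth = belief
    gx, gy, gth = ground_truth
    dx = bx - gx
    dy = by - gy
    dth = (bth - gth + 180.0) % 360.0 - 180.0
    return dx, dy, math.hypot(dx, dy), dth
```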

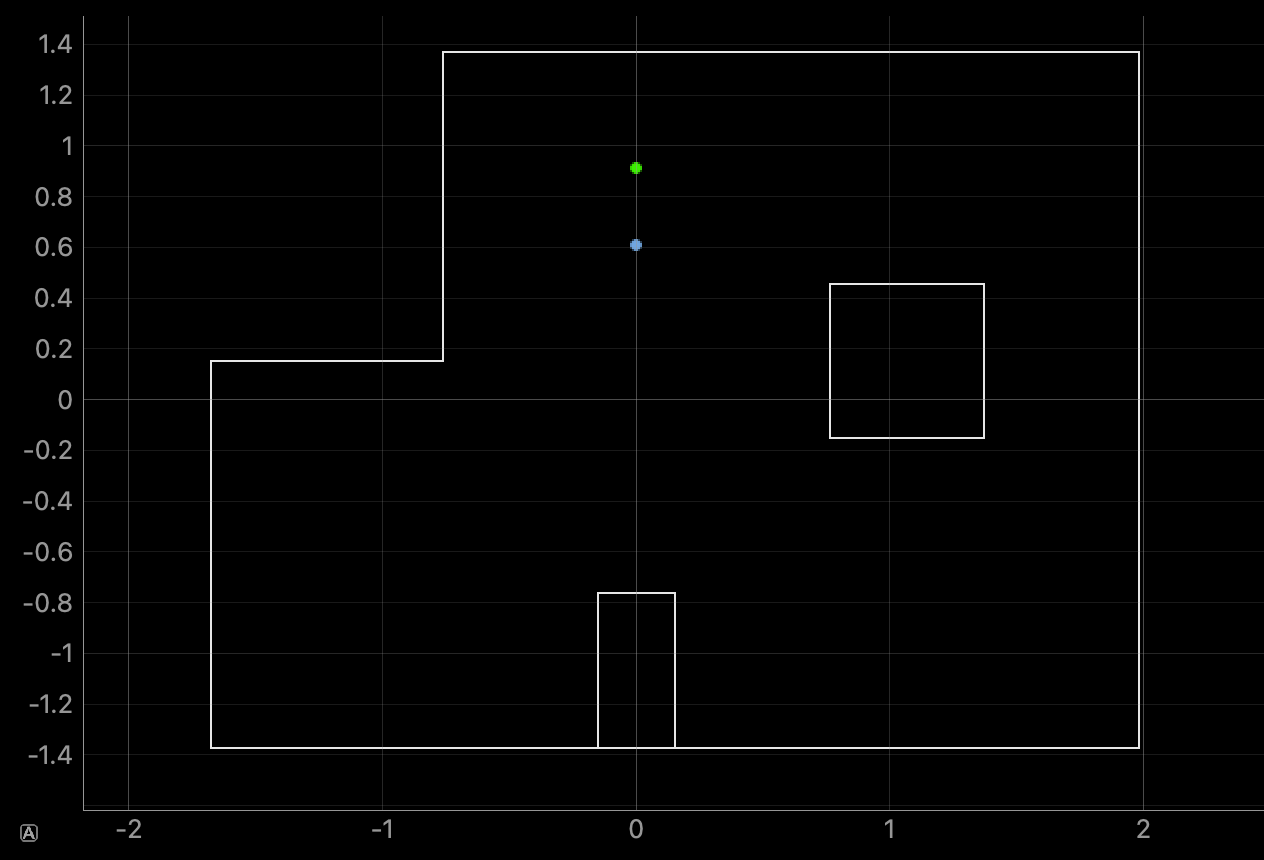

Running localization at (0ft, 3ft, 0 deg)

This localization had translational error in the y-axis and, like the run above, an angular error of 20 degrees. The errors are probably due to the robot not being able to rotate in place. As seen in the video, the robot sometimes drifts downward because its motors are unreliable and uneven.

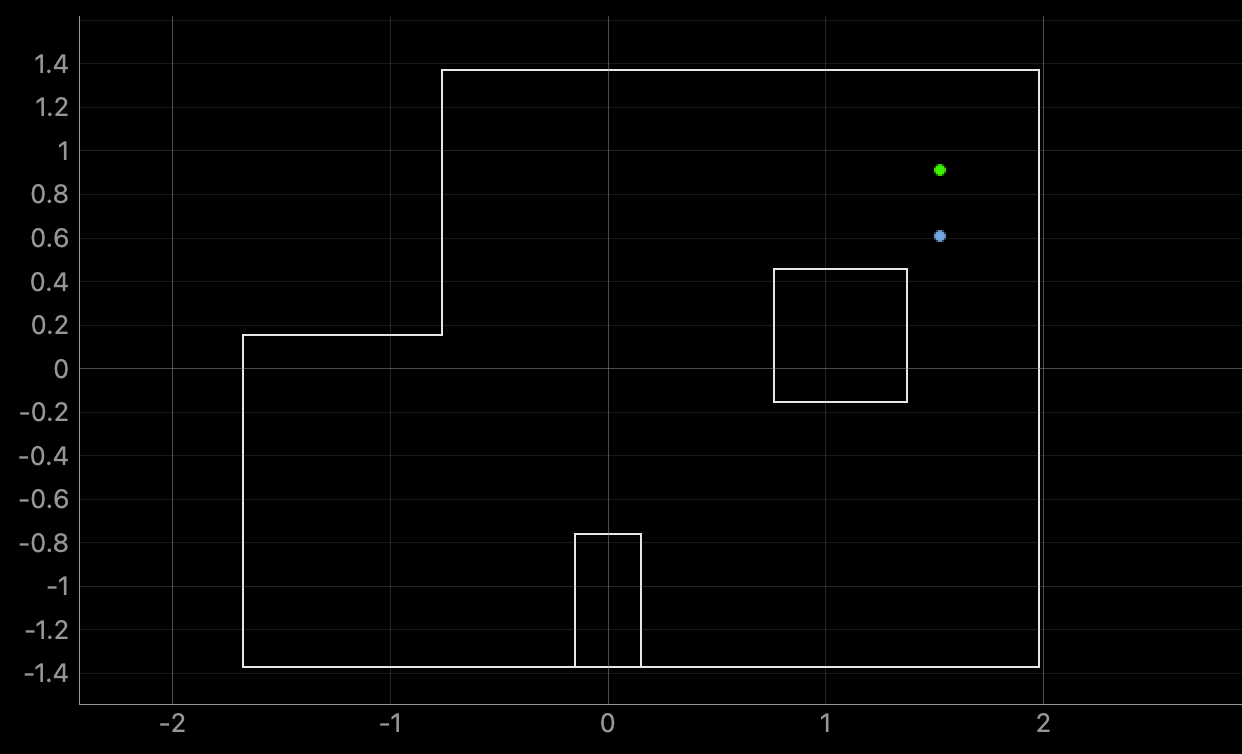

Running localization at (5ft, 3ft, 0 deg)

This run had the same kinds of error as the one above: translational error in y and angular error.

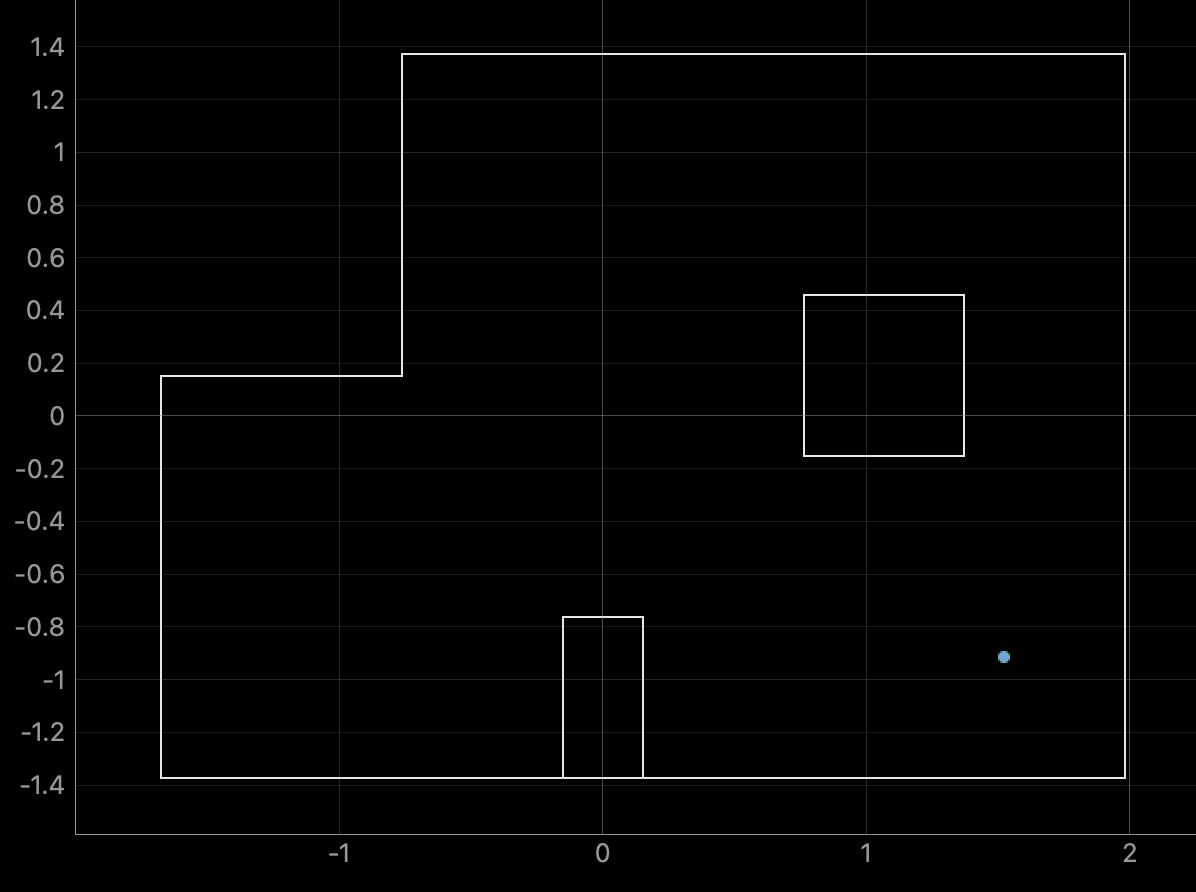

Running localization at (5ft, -3ft, 0 deg)

This run was the most successful localization of the four points, since the belief pose was exactly on the ground-truth pose (the green ground-truth dot is hidden under the blue belief dot). I believe the higher accuracy in this trial is due to the walls being closer to the robot, so the ToF sensor measurements were more accurate.

Conclusion

In this lab, I found that because my robot drifted in the negative y-direction during the localization rotation, the belief tended to have lower y-values than the ground-truth pose (in 3 of the 4 localization runs). The least accurate run was in the bottom-left corner of the arena, so I will probably avoid running localization in that part of the arena. The x-position belief was fairly accurate for most of the points. Except in the bottom-right corner of the arena, the heading was 20 degrees off, which is a problem because my heading estimate could be 20 degrees off if I use this localization in the next lab.

Full code

Arduino:

Python:

I referenced Anya's website (2022) for this lab!